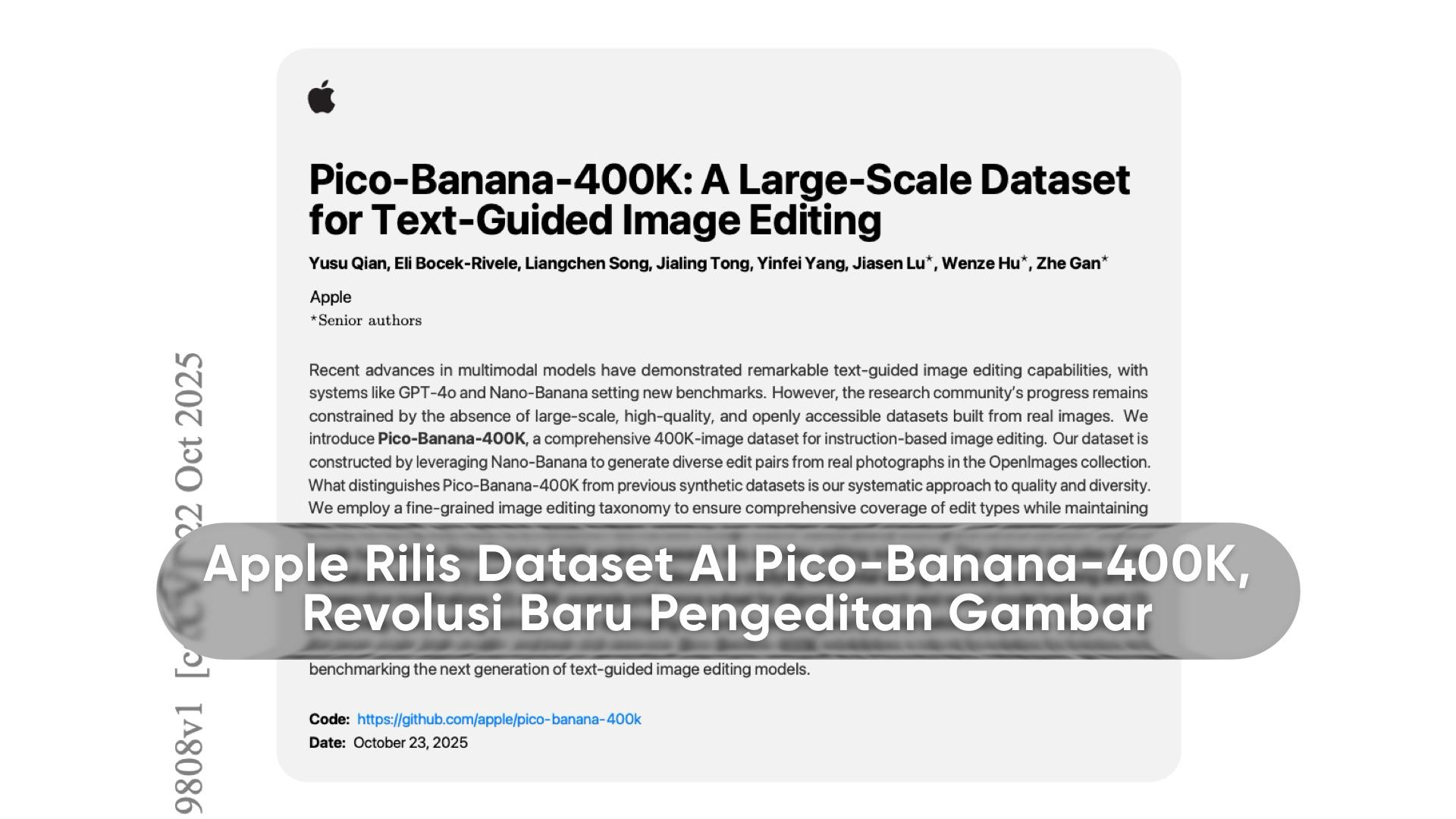

Apple has officially launched Pico-Banana-400K, a new dataset designed to train artificial intelligence models to understand and edit images using only text commands. Pico-Banana-400K marks a major step in Apple's multimodal AI research, introducing an innovative approach to natural-language-based image editing.

The dataset contains 400,000 pairs of photos and editing instructions, making it one of the largest visual datasets Apple has ever released publicly.

Text-Guided Image Editing Revolution

Apple describes Pico-Banana-400K as a dataset that enables AI models to learn to translate text instructions into real visual changes. For example, a user only needs to type a command such as "change day into night" or "make the face look like a Pixar character," and the AI system can generate the edit as requested.

This step marks a major shift in digital editing, which previously required manual work or complex software. With this new approach, Apple positions AI as a creative partner that understands the context of human language.

Components and Dataset Architecture

Pico-Banana-400K was built using Nano-Banana, the nickname of Google's Gemini 2.5 Flash Image model, which automatically performed the image edits. The edited results were then evaluated by Gemini 2.5 Pro, which assessed their quality, realism, and adherence to the original instructions.

This dataset includes several key elements:

Multi-Turn Sequences

As many as 72,000 multi-turn sequences are included to train AI to understand incremental, sequential editing: for example, first changing the color of the sky, then adding night lighting effects, and finally changing the background. This approach brings the model's way of working closer to how humans think during the creative process.

Preference Pairs and Two-Version Instructions

In addition, there are 56,000 preference pairs, each comparing a good editing result with a poor one. This component helps an AI model recognize what a high-quality edit looks like. Each data item is also accompanied by two forms of instruction: a long version written like a technical guide for model training, and a short version that resembles a user's everyday language.
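To make these three components more concrete, here is a minimal Python sketch of how such records might be represented in code. The class and field names (`EditExample`, `PreferencePair`, `MultiTurnSequence`, `long_instruction`, `short_instruction`, and so on) are hypothetical assumptions for illustration only, not the dataset's actual file format; the real schema is documented in the project's GitHub repository.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical record layout for illustration only; the real
# Pico-Banana-400K files may use different names and structure.

@dataclass
class EditExample:
    source_image: str        # path to the original (real) photo
    edited_image: str        # path to the edited result
    long_instruction: str    # detailed, training-oriented wording
    short_instruction: str   # concise, everyday user-style wording

@dataclass
class PreferencePair:
    source_image: str
    instruction: str
    preferred_image: str     # the better edit
    rejected_image: str      # the weaker edit

@dataclass
class MultiTurnSequence:
    source_image: str
    # Each step edits the image produced by the previous step.
    steps: List[EditExample] = field(default_factory=list)

    def instructions(self) -> List[str]:
        """Short instructions in the order they are applied."""
        return [step.short_instruction for step in self.steps]

    def final_image(self) -> str:
        """Image after the last turn, or the source if there are no turns."""
        return self.steps[-1].edited_image if self.steps else self.source_image


# Usage: a two-turn edit (recolor the sky, then add night lighting).
seq = MultiTurnSequence(source_image="street.jpg")
seq.steps.append(EditExample(
    "street.jpg", "street_t1.jpg",
    "Recolor the sky to a deep orange sunset tone while keeping the buildings unchanged.",
    "make the sky orange"))
seq.steps.append(EditExample(
    "street_t1.jpg", "street_t2.jpg",
    "Add warm night lighting from street lamps and lit windows.",
    "add night lighting"))
print(seq.instructions())  # ['make the sky orange', 'add night lighting']
print(seq.final_image())   # street_t2.jpg
```

Keeping the long and short instructions side by side in one record mirrors the article's point that each item carries both a training-style and an everyday phrasing of the same edit.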

Apple's Research Objectives and Direction

With Pico-Banana-400K, Apple reaffirms its commitment to developing multimodal AI that can understand text, images, and context simultaneously. The dataset will help researchers and developers build models that can add or remove objects from photos, change the lighting, adjust the mood, or even imitate a specific visual style such as photorealism or Pixar animation.

Moreover, Apple's main differentiator is its use of real photographs rather than synthetic images. By training on real images, AI models are expected to produce more natural and accurate results that capture the visual nuances of the real world.

Open Source License on GitHub

Pico-Banana-400K has been released openly on GitHub under an Apple research license. This means academics, researchers, and the AI developer community around the world can access, study, and use the dataset for experiments and for training their own visual-text models.

Many observers call this step an "ImageNet for image editing," a reference to the legendary dataset that sparked major progress in computer vision. By making large-scale, high-quality data available, Apple is seen as opening up new opportunities to accelerate research in generative visual AI.

Great Potential for the Creative and Design Industry

The release of Pico-Banana-400K is expected to have a major impact on the creative industry. Designers, photographers, and digital artists could use AI models trained on this dataset to speed up editing and visual exploration.

Impact on Visual Creators

AI models trained on this dataset can understand human-language commands more intuitively. A creator can ask the system to “add sunlight from the left” or “change the clothing to red” without editing manually in design software.

Synergy with the Apple Ecosystem

Apple is likely to integrate this capability into its ecosystem, in apps such as Photos and Final Cut Pro, or even in Vision Pro, its flagship mixed-reality headset. Apple users could then enjoy an intuitive, futuristic editing experience based on natural language.

Analysis: Apple's Strategy in Multimodal AI

This move is in line with a major trend in the technology industry toward multimodal AI, in which systems understand not only text or images but the combination of both.

Companies such as Google and OpenAI have already developed comparable models, such as Gemini and GPT-4o, that can process text, images, and even audio simultaneously. However, Apple's emphasis on open research datasets puts it on a different path, one oriented more toward academic collaboration and data quality than toward speed of commercialization.

Academic and Research Impact

With an open license, universities and research laboratories now have access to an industry-scale dataset that can improve the quality of scientific publications and experiments with multimodal models. Researchers can also measure how well an AI model understands complex instructions that reflect natural human expression.

Reactions from the Community and AI Observers

The global AI community warmly welcomes this move by Apple. Many researchers regard Pico-Banana-400K as one of the most comprehensive visual-text datasets ever released by a major tech company.

Observers from various research institutions describe it as a strategic move to strengthen Apple's position in open AI research. "This dataset shows Apple's seriousness in building a scientific foundation for AI, not just a product," said one of the researchers from the Stanford AI Lab in an interview with TechCrunch.

Meanwhile, tech analysts say Apple is trying to reinforce its reputation as a company focused on data quality and privacy, two values that have been its hallmark for years.

The Future: Toward a New Generation of Visual-Language AI Models

With the release of Pico-Banana-400K, Apple is setting the stage for a new generation of AI models that not only understand human language but can also be visually creative. Such models could sit at the heart of a wide range of future applications, from automated design and film production to creative assistants for mixed reality.

An AI capable of understanding complex commands such as "create a morning atmosphere with soft light in a Tokyo garden" will open new frontiers in digital creativity.

In addition, collaboration between Apple and the research community through open licensing will accelerate innovation across the global technology ecosystem.

Pico-Banana-400K is not just a dataset but a symbol of Apple's ambition to bridge human language and visual creativity through artificial intelligence. With 400,000 image-instruction pairs and open access for the global community, Apple once again demonstrates its ability to push the boundaries of technological innovation.

This step reinforces the future direction of AI: systems that can understand, imagine, and create visuals from text alone. Image editing is no longer merely a matter of tools but of a new way of thinking in the era of artificial intelligence.

Discover more from Insimen
